Creating looping helpers – Python

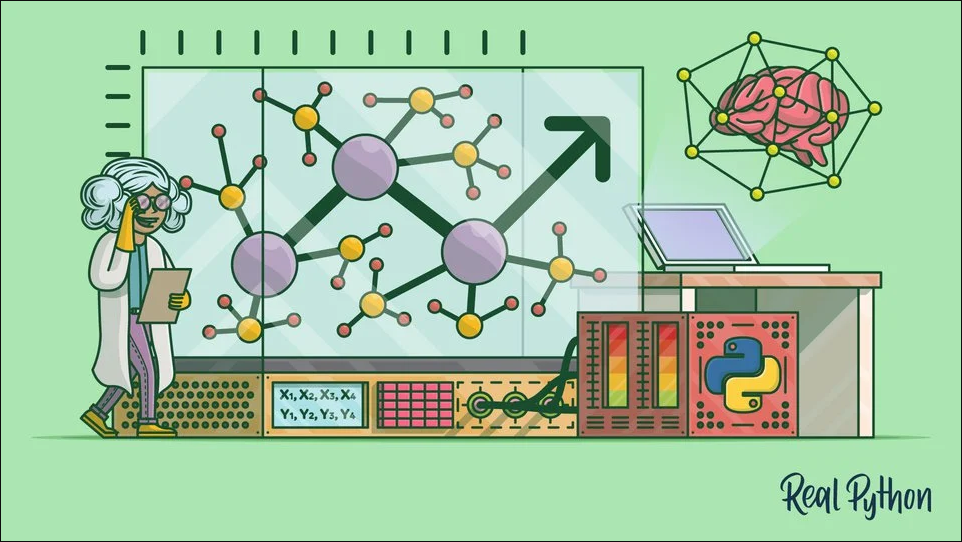

Adapted from the original: Trey Hunner – Python Morsels, "Python tip: create looping helpers". Python's for loops are all about looping over an iterable. That is their sole purpose. Since Python's for loops are more special-purpose than those of some other programming languages, we need to embrace looping helpers to make our lives easier. Python's built-in looping helpers are enumerate, zip, and reversed. You could also think of sorted as […]
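As a rough illustration of the idea, the sketch below uses the three built-in helpers named above and then defines one custom looping helper as a generator function. The name `with_previous` and its `first` parameter are hypothetical, chosen only for this example, and are not taken from the original article.

```python
def with_previous(iterable, first=None):
    """Hypothetical helper: yield (previous, current) pairs.

    `first` is used as the "previous" value for the very first item.
    """
    previous = first
    for current in iterable:
        yield previous, current
        previous = current


colors = ["red", "green", "blue"]
sizes = [1, 2, 3]

# Built-in looping helpers: each returns a lazy iterable,
# so we wrap them in list() to see the results.
numbered = list(enumerate(colors, start=1))  # counter alongside each item
paired = list(zip(colors, sizes))            # items from both lists, in step
backwards = list(reversed(colors))           # items in reverse order

# The custom helper is used in a for loop just like the built-ins.
neighbors = list(with_previous(colors))

for previous, current in with_previous(colors):
    print(previous, "->", current)
```

Because the helper is a generator, it works with any iterable and produces its pairs lazily, which is the same way enumerate, zip, and reversed behave.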
